What Data Scientists Learned by Modeling the Spread of Covid-19

Models of the disease have become more complex, but are still only as good as the assumptions at their core and the data that feed them

Published: 10 February 2024

In March 2020, as the spread of Covid-19 sent shockwaves around the nation, integrative biologist Lauren Ancel Meyers gave a virtual presentation to the press about her findings. Discussing how the disease could devastate local hospitals, she pointed to a graph on which the steepest red curve was labeled “no social distancing.” Hospitals in the Austin, Texas, area would be overwhelmed, she explained, if residents didn’t reduce their interactions outside their household by 90 percent.

Meyers, who models diseases to understand how they spread and what strategies mitigate them, had been nervous about appearing at a public event and had even declined the invitation at first. Her team at the University of Texas at Austin had just joined the city of Austin’s task force on Covid and didn’t know how, exactly, their models would be used. Moreover, because of the rapidly evolving emergency, her findings hadn’t been vetted in the usual way.

“We were confident in our analyses but had never gone public with model projections that had not been through substantial internal validation and peer review,” she writes in an e-mail. Ultimately, she decided the public needed clear communication about the science behind the new stay-at-home order in and around Austin.

The Covid-19 pandemic sparked a new era of disease modeling, one in which graphs once relegated to the pages of scientific journals graced the front pages of major news websites on a daily basis. Data scientists such as Meyers were thrust into the public limelight, like meteorologists forecasting hurricanes on live television for the first time. They knew expectations were high, but also that they could not perfectly predict the future. All they could do was use math and data as guides to guess at what the next day would bring.

As more of the United States’ population becomes fully vaccinated and the nation approaches a sense of pre-pandemic normal, disease modelers have the opportunity to look back on the last year and a half and assess what went well and what didn’t. With so much unknown at the outset, such as how likely an individual is to transmit Covid under different circumstances and how deadly it is in different age groups, it’s no surprise that forecasts sometimes missed the mark, particularly in mid-2020. Models improved as more data became available not just on disease spread and mortality, but also on how human behavior sometimes differed from official public health mandates.

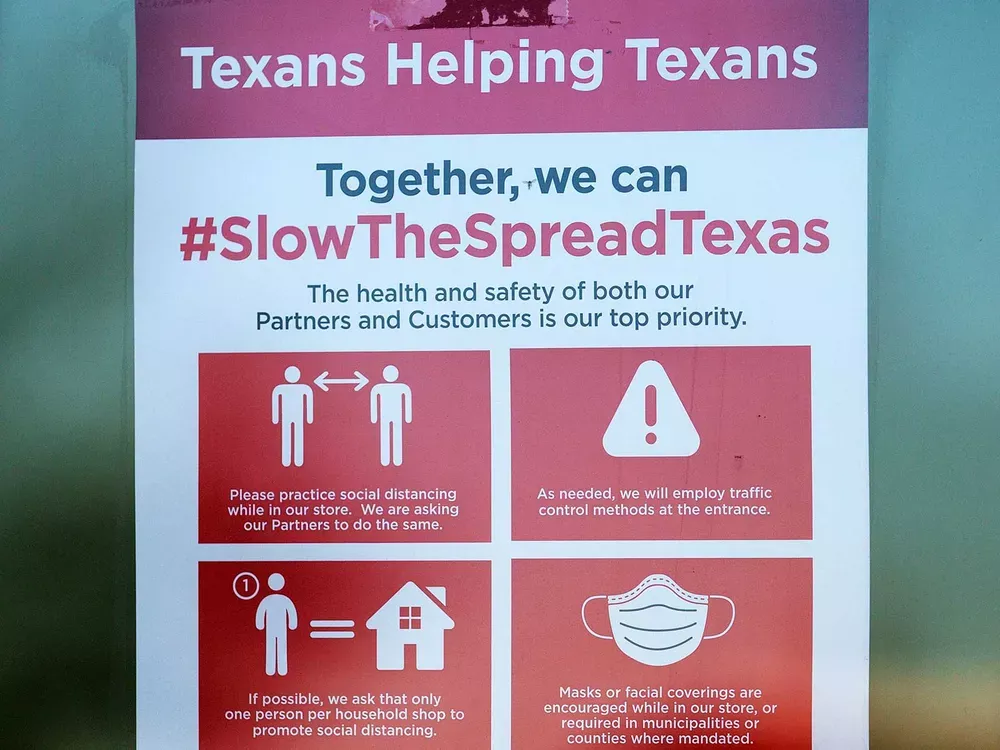

Modelers have had to play whack-a-mole with challenges they didn’t originally anticipate. Data scientists didn’t factor in that some individuals would misinterpret or outright ignore the advice of public health authorities, or that different localities would make varying decisions about social distancing, mask wearing and other mitigation strategies. These ever-changing variables, along with underreported data on infections, hospitalizations and deaths, led models to miscalculate certain trends.

“Basically, Covid threw everything at us at once, and the modeling has required extensive efforts unlike other diseases,” writes Ali Mokdad, a professor at the University of Washington’s Institute for Health Metrics and Evaluation (IHME), in an e-mail.

Still, Meyers considers this a “golden age” in terms of technological innovation for disease modeling. While no one invented a new branch of math to track Covid, disease models have become more complex and adaptable to a multitude of changing circumstances. And as the quality and amount of data researchers could access improved, so did their models.

At the heart of the Covid models that Meyers’ group runs in collaboration with the Texas Advanced Computing Center are differential equations: essentially, math that describes a system that is constantly changing. Each equation corresponds to a state that an individual could be in, defined by factors such as age group, risk level for severe disease and vaccination status, and describes how the number of people in that state might change over time. The model then runs these equations to estimate the likelihood of getting Covid in particular communities.
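A minimal sketch of the stratified idea, in Python: each population group gets its own susceptible, infected and recovered compartments, and a contact matrix couples the groups. This is a hypothetical illustration, not the UT group’s model: the `Stratum` class, the contact numbers, the transmission probability and the 70 percent lower susceptibility assumed for a vaccinated group are all invented, and the equations are stepped forward with a simple Euler scheme.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """One population group, e.g. an age band with a given vaccination status."""
    name: str
    susceptible: float
    infected: float
    recovered: float
    susceptibility: float = 1.0  # relative chance of infection per contact (hypothetical)

    @property
    def size(self) -> float:
        return self.susceptible + self.infected + self.recovered


def step(strata, contacts, beta, gamma, dt):
    """Advance all strata by one forward-Euler step of a stratified SIR model.

    contacts[i][j]: average daily contacts of a member of stratum i with stratum j.
    beta: transmission probability per contact; gamma: daily recovery rate.
    """
    new_infections = []
    for i, si in enumerate(strata):
        # Force of infection on stratum i, weighted by prevalence in each stratum j.
        force = beta * si.susceptibility * sum(
            contacts[i][j] * sj.infected / sj.size for j, sj in enumerate(strata)
        )
        new_infections.append(force * si.susceptible * dt)
    # Apply updates only after computing them, so every stratum sees the same state.
    for si, new in zip(strata, new_infections):
        recoveries = gamma * si.infected * dt
        si.susceptible -= new
        si.infected += new - recoveries
        si.recovered += recoveries


def simulate(strata, contacts, beta, gamma, days, dt=0.1):
    """Run the model for `days` days, updating the strata in place."""
    for _ in range(round(days / dt)):
        step(strata, contacts, beta, gamma, dt)
    return strata


if __name__ == "__main__":
    # Hypothetical example: two strata that differ only in vaccination status.
    groups = [
        Stratum("unvaccinated", 49_990, 10, 0),
        Stratum("vaccinated", 49_990, 10, 0, susceptibility=0.3),
    ]
    for g in simulate(groups, [[8, 4], [4, 8]], beta=0.03, gamma=0.2, days=180):
        print(f"{g.name}: {g.recovered / g.size:.1%} recovered by day 180")
```

Adding an age dimension or a risk level would mean adding more strata and a larger contact matrix; the update rule stays the same.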

Differential equations have been around for centuries, and the approach of dividing a population into groups who are “susceptible,” “infected,” and “recovered” dates back to 1927. This is the basis for one popular kind of Covid model, which tries to simulate the spread of the disease based on assumptions about how many people an individual is likely to infect.
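In the textbook form of that 1927 approach (the Kermack and McKendrick SIR model), a population of size $N = S + I + R$ with transmission rate $\beta$ and recovery rate $\gamma$ evolves as shown below. The ratio $R_0$ is the average number of people one infected person infects in a fully susceptible population, the kind of assumption such models are built on. This is the classic version, shown for illustration; Covid models layered many more compartments on top of it.

```latex
\frac{dS}{dt} = -\beta \frac{S I}{N}, \qquad
\frac{dI}{dt} = \beta \frac{S I}{N} - \gamma I, \qquad
\frac{dR}{dt} = \gamma I, \qquad
R_0 = \frac{\beta}{\gamma}
```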

But Covid demanded that data scientists make their existing toolboxes a lot more complex. For example, Jeffrey Shaman of Columbia University and his colleagues created a meta-population model that included 375 locations linked by the travel patterns between them.
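To illustrate what “linked by travel” can mean, here is a toy metapopulation model in Python: each location runs its own SIR dynamics, and travelers carry susceptible, infected and recovered people between locations. It is a hypothetical sketch, not the 375-location model itself, which also estimated undocumented infections. The function name, the travel matrix and all rates are assumptions, travelers are assumed to be a random sample of their origin’s population, and travel is assumed symmetric so populations stay constant.

```python
def simulate_metapopulation(pop, seed, travel, beta, gamma, days, dt=0.1):
    """Toy SIR metapopulation: local transmission plus travel between locations.

    pop[k]: population of location k; seed[k]: initial infections there.
    travel[i][j]: daily travelers from location i to j (assumed symmetric).
    Returns the lists S, I, R (one entry per location) after `days` days.
    """
    n = len(pop)
    S = [float(p - s) for p, s in zip(pop, seed)]
    I = [float(s) for s in seed]
    R = [0.0] * n
    for _ in range(round(days / dt)):
        # 1. Local SIR dynamics within each location (forward Euler).
        for k in range(n):
            N = S[k] + I[k] + R[k]
            new_inf = beta * S[k] * I[k] / N * dt
            recov = gamma * I[k] * dt
            S[k] -= new_inf
            I[k] += new_inf - recov
            R[k] += recov
        # 2. Travel: travelers carry S, I and R in proportion to their local shares.
        dS, dI, dR = [0.0] * n, [0.0] * n, [0.0] * n
        for i in range(n):
            N_i = S[i] + I[i] + R[i]
            for j in range(n):
                if i == j or travel[i][j] == 0:
                    continue
                share = travel[i][j] * dt / N_i
                for comp, delta in ((S, dS), (I, dI), (R, dR)):
                    delta[i] -= comp[i] * share
                    delta[j] += comp[i] * share
        for k in range(n):
            S[k] += dS[k]
            I[k] += dI[k]
            R[k] += dR[k]
    return S, I, R


if __name__ == "__main__":
    # Hypothetical chain of three locations: 0 <-> 1 <-> 2, outbreak seeded in 0.
    pop = [100_000, 50_000, 20_000]
    travel = [[0, 500, 0], [500, 0, 200], [0, 200, 0]]
    S, I, R = simulate_metapopulation(pop, [10, 0, 0], travel, beta=0.4, gamma=0.2, days=120)
    for k, (r, p) in enumerate(zip(R, pop)):
        print(f"location {k}: {r / p:.1%} recovered by day 120")
```

With every travel entry set to zero, the outbreak never leaves location 0; with travel switched on, it reaches every connected location, which is why travel data matter in such models.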

Using information from all of those cities, “We were able to estimate accurately undocumented infection rates, the contagiousness of those undocumented infections, and the fact that pre-symptomatic shedding was taking place, all in one fell swoop, back in the end of January last year,” he says.

IHME’s modeling originally began as an effort to help University of Washington hospitals prepare for a surge in the state, and it quickly expanded to model Covid cases and deaths around the world. In the spring of 2020, IHME launched an interactive website that included projections as well as a “hospital resource use” tool showing, for each U.S. state, how many hospital beds, and separately how many ICU beds, would be needed to meet projected demand. Mokdad says many countries have used IHME’s data to inform their Covid-related restrictions, prepare for disease surges and add hospital beds.

As the accuracy and abundance of data improved over the course of the pandemic, models attempting to describe what was going on got better, too.

In April and May of 2020, IHME predicted that Covid case numbers and deaths would continue declining. The Trump White House Council of Economic Advisers even referenced IHME’s mortality projections when showcasing economic adviser Kevin Hassett’s “cubic fit” curve, which predicted a much steeper drop-off in deaths than IHME did. Hassett’s model, which simply fit a mathematical function to the data, was widely ridiculed at the time because it had no basis in epidemiology.
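Why a pure curve fit can mislead is easy to demonstrate. The Python sketch below fits a cubic polynomial to 30 days of synthetic, steadily declining death counts. The data are invented, and the code is not a reconstruction of the Council of Economic Advisers’ calculation. The fit matches the data closely, but extrapolated months ahead it predicts negative deaths, because nothing in a polynomial encodes how a disease spreads.

```python
import numpy as np

# Synthetic, invented data: 30 days of deaths falling steadily by 2 percent a day.
days = np.arange(30)
observed = 2000 * np.exp(-0.02 * days)

# Fit a cubic polynomial: pure curve fitting, with no model of transmission behind it.
cubic = np.poly1d(np.polyfit(days, observed, deg=3))

print("largest in-sample error:", round(float(np.max(np.abs(cubic(days) - observed))), 2))
for day in (60, 100, 200):
    trend = 2000 * np.exp(-0.02 * day)  # the pattern that actually generated the data
    print(f"day {day}: cubic {cubic(day):8.0f}   underlying trend {trend:6.0f}")
```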

But IHME’s projections of a summertime decline didn’t hold up, either. Instead, the U.S. continued to see high rates of infections and deaths, with a spike in July and August.

Mokdad notes that at the time, IHME didn’t have data on mask use and mobility; it had only information about state mandates. The team also learned over time that state-based restrictions did not necessarily predict behavior: adherence to protocols such as social distancing varied significantly across states. The IHME models have improved because the data have improved.

Better data is having tangible impacts. At the Centers for Disease Control and Prevention, Michael Johansson, who leads the Covid-19 modeling team, saw hospitalization forecasts improve after state-level hospitalization data became publicly available in late 2020. In mid-November, the CDC asked all potential modeling groups to forecast the number of Covid-positive hospital admissions, and the common dataset put them on equal footing. That allowed the CDC to develop “ensemble” forecasts, made by combining different models, to help prepare for future demand for hospital services.
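As a rough illustration of how an ensemble can combine forecasts, the Python sketch below takes, for each quantile level, the median of the values reported by several models. The quantile-wise median is one common combination rule; whether it matches the CDC’s exact method at any given time is an assumption here, and the model names and numbers are invented.

```python
from statistics import median


def ensemble_quantiles(forecasts):
    """Combine quantile forecasts by taking the median across models at each level.

    forecasts maps a model name to {quantile_level: predicted_admissions}; all
    models are assumed to report the same quantile levels.
    """
    levels = sorted(next(iter(forecasts.values())))
    return {q: median(model[q] for model in forecasts.values()) for q in levels}


if __name__ == "__main__":
    # Invented forecasts of Covid-positive hospital admissions from three models.
    forecasts = {
        "model_a": {0.025: 80, 0.5: 120, 0.975: 200},
        "model_b": {0.025: 95, 0.5: 140, 0.975: 230},
        "model_c": {0.025: 60, 0.5: 110, 0.975: 400},
    }
    print(ensemble_quantiles(forecasts))  # {0.025: 80, 0.5: 120, 0.975: 230}
```

Using the median rather than the mean keeps one model’s unusually high upper bound (model_c’s 400) from pulling the ensemble’s upper bound up.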

“This has improved the actionability and evaluation of these forecasts, which are incredibly useful for understanding where healthcare resource needs may be increasing,” Johansson writes in an e-mail.

Meyers’ initial Covid projections were based on simulations she and her team at the University of Texas at Austin had been working on for more than a decade, since the 2009 H1N1 flu outbreak. They had created online tools and simulators to help the state of Texas plan for the next pandemic. When Covid-19 hit, Meyers’ team was ready to spring into action.

“The moment we heard about this anomalous virus in Wuhan, we went to work,” says Meyers, now the director of the UT Covid-19 Modeling Consortium. “I mean, we were building models, literally, the next day.”

Researchers can lead policy-makers to mathematical models of the spread of a disease, but that doesn’t necessarily mean the information will result in policy changes. In the case of Austin, however, Meyers’ models helped convince the city of Austin and Travis County to issue a stay-at-home order in March of 2020, and then to extend it in May.

The Austin-area task force came up with a color-coded system denoting five stages of Covid-related restrictions and risks. Meyers’ team tracks Covid-related hospital admissions in the metro area daily, and those numbers form the basis of that system. When admission rates fall low enough, the area moves to a lower stage. Most recently, Meyers worked with the city to revise those thresholds to account for local vaccination rates.
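The stage logic amounts to comparing a rolling average of hospital admissions with a set of thresholds. A minimal Python sketch, assuming a seven-day average and invented cutoffs; the task force’s real thresholds, and the revisions that account for vaccination, are not reproduced here.

```python
# Invented cutoffs on the 7-day average of daily Covid-19 hospital admissions,
# listed from the highest stage down; the task force's real thresholds differ.
STAGE_THRESHOLDS = [(70, 5), (50, 4), (30, 3), (10, 2)]


def seven_day_average(daily_admissions):
    """Mean of the most recent (up to) seven days of admissions."""
    recent = daily_admissions[-7:]
    if not recent:
        raise ValueError("need at least one day of data")
    return sum(recent) / len(recent)


def risk_stage(daily_admissions, thresholds=STAGE_THRESHOLDS):
    """Return a stage from 1 (lowest risk) to 5 (highest) for recent admissions."""
    average = seven_day_average(daily_admissions)
    for cutoff, stage in thresholds:
        if average >= cutoff:
            return stage
    return 1


if __name__ == "__main__":
    print(risk_stage([12, 15, 9, 11, 14, 10, 13]))  # average 12.0 -> stage 2
```

In this sketch, revising the thresholds, as the city did to reflect vaccination rates, would amount to passing a different `thresholds` list.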

But sometimes model-based recommendations were overruled by other governmental decisions.

In spring 2020, tension emerged between Austin locals who wanted to keep strict restrictions on businesses and Texas policy-makers who wanted to reopen the economy. One point of contention was construction work, which the state declared permissible.

Because of the nature of the job, construction workers are often in close contact with one another, heightening the threat of viral exposure and severe disease. In April 2020, Meyers’ group’s modeling results showed that the Austin area’s 500,000 construction workers were four to five times more likely to be hospitalized with Covid than people of the same age in other occupational groups.

The actual numbers from March to August turned out to be strikingly similar to the projections, with construction workers five times more likely to be hospitalized, according to an analysis by Meyers and colleagues in JAMA Network Open.
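“Five times more likely” is a ratio of rates: hospitalizations per person among construction workers divided by hospitalizations per person in a comparison group of the same age. A Python sketch of that arithmetic, using invented counts rather than the study’s data:

```python
def rate_ratio(hosp_group, pop_group, hosp_ref, pop_ref):
    """Hospitalization rate in a group divided by the rate in a reference group."""
    return (hosp_group / pop_group) / (hosp_ref / pop_ref)


if __name__ == "__main__":
    # Invented counts for one age band: 50 hospitalizations among 10,000
    # construction workers versus 100 among 100,000 people in other jobs.
    print(f"{rate_ratio(50, 10_000, 100, 100_000):.1f}x")  # 5.0x
```

Computing the ratio within a single age band is what makes it a like-for-like comparison; mixing age groups could inflate or hide the occupational effect.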

“Maybe it would have been even worse, had the city not been aware of it and tried to encourage precautionary behavior,” Meyers says. “But certainly it turned out that the risks were much higher, and probably did spill over into the communities where those workers lived.”

Some researchers like Meyers had been preparing for their entire careers to test their disease models on an event like this. But one newcomer quickly became a minor celebrity.

Youyang Gu, a 27-year-old data scientist in New York, had never studied disease trends before Covid, but had experience in sports analytics and finance. In April of 2020, while visiting his parents in Santa Clara, California, Gu created a data-driven infectious disease model with a machine-learning component. He posted death forecasts for 50 states and 70 other countries at covid19-projections.com until October 2020; more recently he has looked at U.S. vaccination trends and the “path to normality.”

While Meyers and Shaman say they didn’t find any particular metric to be more reliable than any other, Gu initially focused only on the number of deaths because he thought death counts rested on better data than case and hospitalization counts. Gu says that may be one reason his models have sometimes aligned better with reality than those from established institutions, such as in predicting the surge in the summer of 2020. He isn’t sure what direct effects his models have had on policies, but last year the CDC cited his results.
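Gu’s exact method isn’t described here, so what follows is only a generic illustration of calibrating a model to death counts: a simple discrete-time SIR model whose deaths are a fixed share of new infections, lagged by two weeks, plus a brute-force search for the transmission rate that best matches an observed series. The grid search is a far simpler stand-in for a machine-learning component, and the infection fatality rate, lag, population and synthetic “observed” data are all assumptions.

```python
def simulate_deaths(beta, days=60, gamma=0.2, ifr=0.01, population=1_000_000,
                    initial_infected=100, lag=14):
    """Daily deaths from a discrete-time SIR model in which a fixed share (ifr)
    of each day's new infections die `lag` days later. Defaults are hypothetical."""
    s, i = float(population - initial_infected), float(initial_infected)
    new_infections = []
    for _ in range(days):
        new = beta * s * i / population
        s -= new
        i += new - gamma * i
        new_infections.append(new)
    # Shift infections forward by the lag and scale them into deaths.
    return [0.0] * lag + [ifr * x for x in new_infections[: days - lag]]


def fit_beta(observed_deaths, candidates):
    """Return the candidate transmission rate whose simulated deaths best match
    the observed series in the least-squares sense (a brute-force grid search)."""
    def loss(beta):
        simulated = simulate_deaths(beta, days=len(observed_deaths))
        return sum((sim - obs) ** 2 for sim, obs in zip(simulated, observed_deaths))
    return min(candidates, key=loss)


if __name__ == "__main__":
    # Synthetic "observed" deaths generated with beta = 0.35, then recovered by the search.
    observed = simulate_deaths(0.35)
    grid = [round(0.20 + 0.01 * k, 2) for k in range(31)]  # 0.20, 0.21, ..., 0.50
    print(fit_beta(observed, grid))  # 0.35
```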

Today, some of the leading models disagree sharply about how many Covid deaths have gone unreported. In May of this year, IHME revised its model to estimate that more than 900,000 people in the U.S. have died of Covid, compared with the CDC’s count of just under 600,000. IHME researchers arrived at the higher estimate by comparing deaths in each week to deaths in the corresponding week of the previous year, and then accounting for other causes that might explain excess deaths, such as opioid use and low health care utilization. IHME forecasts that by September 1, the U.S. will have experienced 950,000 deaths from Covid.
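The excess-deaths arithmetic described here can be sketched in a few lines of Python: subtract each week’s baseline deaths from the observed count, then subtract the part of the excess attributed to other causes. The weekly figures are invented for illustration, and real analyses involve uncertainty and adjustments this sketch omits.

```python
def excess_deaths(observed, baseline, other_causes=None):
    """Deaths above a baseline, minus the part of that excess attributed to other causes.

    observed[k]: all-cause deaths in week k; baseline[k]: deaths in the same week of
    the comparison year; other_causes[k]: excess in week k attributed to non-Covid
    causes (for example, opioid use or low health care utilization).
    """
    if other_causes is None:
        other_causes = [0] * len(observed)
    if len(baseline) != len(observed) or len(other_causes) != len(observed):
        raise ValueError("weekly series must have the same length")
    return sum(o - b - x for o, b, x in zip(observed, baseline, other_causes))


if __name__ == "__main__":
    # Four invented weeks of data.
    observed = [1200, 1350, 1500, 1400]
    baseline = [1000, 1010, 990, 1005]
    other = [30, 40, 35, 30]
    print(excess_deaths(observed, baseline))         # 1445 excess deaths overall
    print(excess_deaths(observed, baseline, other))  # 1310 attributed to Covid-19
```

How much of the excess is assigned to other causes is itself an assumption, which is one reason estimates built this way can differ so widely from official counts.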

This new approach contradicts many other estimates, which do not assume such a large undercount of Covid deaths. It is another example of how models’ projections diverge because different assumptions are built into their machinery.

Covid models are now equipped to handle many different factors and adapt to changing situations, but the disease has demonstrated the need to expect the unexpected and be ready to innovate further as new challenges arise. Data scientists are thinking through how future Covid booster shots should be distributed, how to ensure that face masks are available if they are urgently needed in the future, and other questions about this and other viruses.

“We're already hard at work trying to, with hopefully a little bit more lead time, try to think through how we should be responding to and predicting what COVID is going to do in the future,” Meyers says.