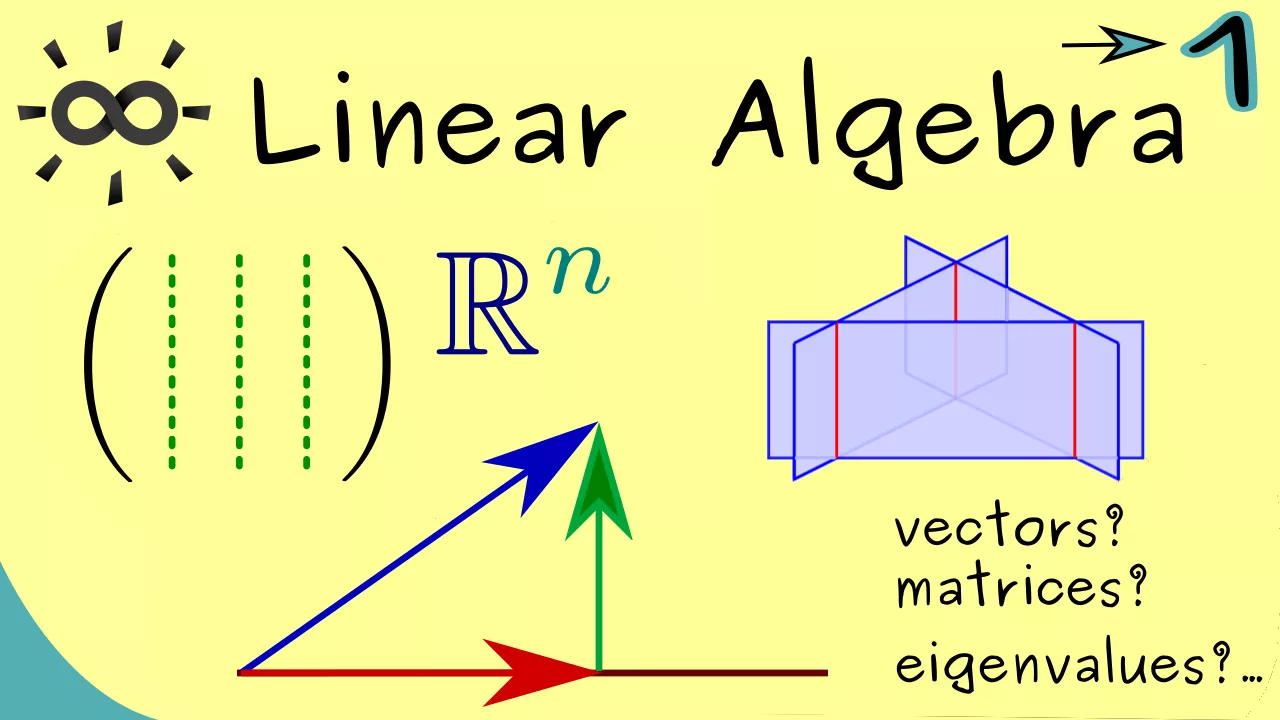

Linear Algebra: Foundations and Applications

Linear algebra is a branch of mathematics that studies vectors, vector spaces, linear transformations, and systems of linear equations.

Published: 05 April 2024

Linear algebra provides a powerful framework for solving a wide range of problems in mathematics, science, engineering, and computer science.

Basic Concepts

At the heart of linear algebra are vectors and matrices. A vector can be viewed geometrically as a quantity with magnitude and direction, or more generally as an ordered list of numbers. Vectors can be added together, multiplied by a scalar, and multiplied by a matrix to produce new vectors.
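To make these three operations concrete, here is a minimal sketch that represents vectors and matrices as plain Python lists. This is an illustrative choice, not how production code would normally do it (a numerical library is the usual tool), and the function names `add`, `scale`, and `mat_vec` are hypothetical.

```python
# Vectors and matrices as plain Python lists (illustrative only).
Vector = list[float]
Matrix = list[list[float]]


def add(u: Vector, v: Vector) -> Vector:
    """Component-wise sum of two vectors of equal length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(u, v)]


def scale(c: float, v: Vector) -> Vector:
    """Multiply every component of v by the scalar c."""
    return [c * a for a in v]


def mat_vec(A: Matrix, v: Vector) -> Vector:
    """Matrix-vector product: entry i is the dot product of row i of A with v."""
    if any(len(row) != len(v) for row in A):
        raise ValueError("number of columns of A must equal len(v)")
    return [sum(a * b for a, b in zip(row, v)) for row in A]


u = [1.0, 2.0]
v = [3.0, -1.0]
A = [[1.0, 2.0],
     [3.0, 4.0]]

print(add(u, v))      # [4.0, 1.0]
print(scale(2.0, u))  # [2.0, 4.0]
print(mat_vec(A, u))  # [5.0, 11.0]
```

Each entry of the matrix-vector product is a dot product of one row of the matrix with the vector, which is why the number of columns must match the vector's length.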

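Matrices are rectangular arrays of numbers that can represent linear transformations and systems of linear equations. Matrices of the same size can be added, a matrix with n columns can be multiplied by a matrix with n rows, and a square matrix with nonzero determinant can be inverted. These operations are used to solve systems of equations and to analyze how transformations act on vectors.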
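Although a system Ax = b can in principle be solved by computing the inverse of A, numerical software usually solves it directly by elimination. The sketch below uses one standard method, Gaussian elimination with partial pivoting, on plain Python lists; the function name `solve` and the singularity tolerance `1e-12` are illustrative assumptions.

```python
def solve(A: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system A x = b by Gaussian elimination with partial pivoting.

    Raises ValueError if A is not square or is singular (to within a small tolerance).
    """
    n = len(A)
    if any(len(row) != n for row in A) or len(b) != n:
        raise ValueError("A must be square and len(b) must equal its size")
    # Build the augmented matrix [A | b] so the caller's inputs are not modified.
    M = [[float(x) for x in row] + [float(rhs)] for row, rhs in zip(A, b)]

    for col in range(n):
        # Partial pivoting: choose the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]

        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]

    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


# 2x + y = 5 and x + 3y = 10 have the unique solution x = 1, y = 3.
print(solve([[2, 1], [1, 3]], [5, 10]))  # [1.0, 3.0]
```

Pivoting on the largest available entry keeps the elimination factors small, which limits the growth of rounding errors; a singular matrix such as [[1, 2], [2, 4]] is reported with a ValueError rather than producing a meaningless answer.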

Applications

Linear algebra has applications across diverse fields, including physics, engineering, computer graphics, and machine learning. In physics, linear algebra is used to describe the motion of objects, analyze electromagnetic fields, and solve differential equations.

In engineering, linear algebra is used to model and analyze electrical circuits, control systems, and structural mechanics. In computer graphics, linear algebra is used to represent and manipulate geometric objects, render images, and simulate physical phenomena.
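As a small illustration of how computer graphics manipulates geometry with matrices, the sketch below rotates the corners of a square using a 2x2 rotation matrix. The helper names `rotation_matrix` and `transform` and the square's coordinates are hypothetical, chosen only for the example.

```python
import math


def rotation_matrix(theta: float) -> list[list[float]]:
    """2x2 matrix that rotates points counterclockwise by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]


def transform(M: list[list[float]], points: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Apply the 2x2 matrix M to each (x, y) point."""
    return [(M[0][0] * x + M[0][1] * y, M[1][0] * x + M[1][1] * y) for x, y in points]


# A unit square with one corner at the origin (illustrative data).
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
rotated = transform(rotation_matrix(math.pi / 2), square)
print([(round(x, 6), round(y, 6)) for x, y in rotated])
```

Rotating by a quarter turn maps (1, 0) to (0, 1) and (1, 1) to (-1, 1), up to floating-point rounding. Because each transformation is a matrix, several of them can be combined into a single matrix by multiplying the matrices together before applying the result to every point.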

Foundations

The study of linear algebra is based on a set of fundamental concepts and theorems, including vector spaces, linear independence, basis and dimension, eigenvalues and eigenvectors, and inner product spaces.

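A vector space is a set of vectors equipped with addition and scalar multiplication that satisfies a list of axioms, such as closure under both operations, the existence of a zero vector, and distributivity of scalar multiplication over addition. A set of vectors is linearly independent if no vector in it can be written as a linear combination of the others; equivalently, the only linear combination of the vectors that equals the zero vector is the one with all coefficients equal to zero.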
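One practical way to test linear independence is to place the vectors as rows of a matrix and compute its rank by row reduction: a finite set of vectors is independent exactly when the rank equals the number of vectors. The sketch below assumes the vectors all have the same length and uses Python's `fractions.Fraction` for exact arithmetic; the function names are hypothetical.

```python
from fractions import Fraction


def rank(vectors: list[list[float]]) -> int:
    """Rank of the matrix whose rows are the given vectors, via exact row reduction."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    if not rows:
        return 0
    n_cols = len(rows[0])
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def is_linearly_independent(vectors: list[list[float]]) -> bool:
    """A finite set of vectors is independent exactly when its rank equals its size."""
    return rank(vectors) == len(vectors)


print(is_linearly_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))  # True
print(is_linearly_independent([[1, 2, 3], [2, 4, 6]]))             # False: second = 2 * first
```

Exact fractions avoid having to choose a floating-point tolerance for deciding whether an entry is zero; with floating-point data, a numerical rank based on a tolerance would be the usual alternative.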

Applications in Machine Learning

Linear algebra plays a crucial role in machine learning, a subfield of artificial intelligence that focuses on developing algorithms and models that can learn from and make predictions based on data. In machine learning, vectors are used to represent data points, and matrices are used to represent transformations and models.

Linear algebra techniques such as matrix factorizations (for example, the singular value decomposition, or SVD) and principal component analysis (PCA) are used to extract meaningful patterns and features from data, reduce dimensionality, and train predictive models.
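To show where eigenvectors enter PCA, the sketch below finds the first principal component of a small dataset as the top eigenvector of its sample covariance matrix, using power iteration. This is a swapped-in teaching method, not how libraries typically compute PCA (they usually rely on an SVD routine), and it assumes the largest eigenvalue is strictly larger than the others. The function names and the sample points are hypothetical.

```python
import math


def covariance_matrix(data: list[list[float]]) -> list[list[float]]:
    """Sample covariance matrix (divides by n - 1); each row of data is one observation."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(data, iterations: int = 1000, tol: float = 1e-12):
    """Top eigenvector of the covariance matrix via power iteration.

    Returns (unit direction, variance along that direction).
    """
    C = covariance_matrix(data)
    d = len(C)
    v = [1.0] * d  # starting guess; must not be orthogonal to the top eigenvector
    for _ in range(iterations):
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("C v is zero; the starting vector lies in the null space of C")
        w = [x / norm for x in w]
        converged = max(abs(a - b) for a, b in zip(w, v)) < tol
        v = w
        if converged:
            break
    # The Rayleigh quotient v^T C v gives the matching eigenvalue, i.e. the variance.
    Cv = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
    variance = sum(a * b for a, b in zip(v, Cv))
    return v, variance


points = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
direction, var = first_principal_component(points)
print(direction, var)  # approximately (1, 2)/sqrt(5) and 5.0
```

All three sample points lie on the line y = 2x, so the covariance matrix is [[1, 2], [2, 4]], whose largest eigenvalue is 5 with eigenvector (1, 2)/sqrt(5). Projecting the centered data onto this single direction keeps all of its variance, which is the sense in which PCA reduces dimensionality.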

Conclusion

Linear algebra provides a powerful toolkit for solving problems in mathematics, science, engineering, and computer science. By studying vectors, matrices, and linear transformations, researchers gain insights into the structure and behavior of complex systems and develop tools and techniques for solving real-world problems.

As the applications of linear algebra continue to grow, its foundations (vector spaces, linear independence, bases and dimension, eigenvalues and eigenvectors, and inner products) remain essential for understanding and applying the mathematics of vectors and matrices.